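About this guide

This guide is meant to help document how rustc, the Rust compiler, works, and to help new contributors get involved in rustc development.

The guide is divided into six parts:

- Building, debugging, and contributing to rustc: information that should be useful no matter how you plan to contribute, such as the general process for contributing, how to build the compiler, and so on.

- High-level compiler architecture: discusses the high-level architecture of the compiler and the stages of the compilation process.

- Source code representation: describes the process of taking source code from the user and transforming it into the various forms the compiler can work with easily.

- Analysis: discusses the analyses the compiler uses to check various properties of the code and to inform later stages of compilation (e.g., type checking).

- From MIR to binaries: how linked executable machine code is generated.

- Appendices: lots of handy reference information, including a glossary.

The guide itself is of course open source as well, and the sources for this book can be found in the GitHub repository. If you find any mistakes in the book, please file an issue, or even better, open a PR with a correction!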

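Other information

You might also find the following sites useful:

- rustc API docs -- rustdoc documentation for the compiler

- Forge -- documentation about Rust infrastructure, team procedures, and more

- compiler-team -- the home base for the Rust compiler team, with descriptions of the team procedures, active working groups, and the team calendar.

Part 1: Building, debugging, and contributing to rustc

This part of the rustc-dev-guide contains knowledge that should be useful no matter which part of the compiler you are working on. This includes both technical information and tips (e.g., how to compile and debug the compiler) and information about workflows in the Rust project (e.g., stabilization and the compiler team).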

About the compiler team

rustc is maintained by the Rust compiler team. The people who belong to this team collectively work to track regressions and implement new features. Members of the Rust compiler team are people who have made significant contributions to rustc and its design.

Discussion

Currently the compiler team chats in two places:

- The t-compiler stream on the Zulip instance

- The compiler channel on the rust-lang Discord

Expert map

If you're interested in figuring out who can answer questions about a particular part of the compiler, or you'd just like to know who works on what, check out our experts directory. It contains a listing of the various parts of the compiler and a list of people who are experts on each one.

Rust compiler meeting

The compiler team has a weekly meeting where we do triage and try to generally stay on top of new bugs, regressions, and other things. The meeting is held on Zulip and works roughly as follows:

- Review P-high bugs: P-high bugs are those that are sufficiently important for us to actively track progress. P-high bugs should ideally always have an assignee.

- Look over new regressions: we then look for new cases where the compiler broke previously working code in the wild. Regressions are almost always marked as P-high; the major exception would be bug fixes (though even there we often aim to give warnings first).

- Check I-nominated issues: These are issues where feedback from the team is desired.

- Check for beta nominations: These are nominations of things to backport to beta.

- Possibly WG checking: A WG may give an update at this point, if there is time.

The meeting currently takes place on Thursdays at 10am Boston time (typically UTC-4, but daylight saving time sometimes makes things complicated).

The meeting is held over a "chat medium", currently on Zulip.

Team membership

Membership in the Rust team is typically offered when someone has been making significant contributions to the compiler for some time. Membership is both a recognition and an obligation: compiler team members are generally expected to help with upkeep as well as doing reviews and other work.

If you are interested in becoming a compiler team member, the first thing to do is to start fixing some bugs, or get involved in a working group. One good way to find bugs is to look for open issues tagged with E-easy or E-mentor.

r+ rights

Once you have made a number of individual PRs to rustc, we will often offer r+ privileges. This means that you have the right to instruct "bors" (the robot that manages which PRs get landed into rustc) to merge a PR (here are some instructions for how to talk to bors).

The guidelines for reviewers are as follows:

- You are always welcome to review any PR, regardless of who it is assigned to. However, do not r+ PRs unless:

  - You are confident in that part of the code.

  - You are confident that nobody else wants to review it first.

    - For example, sometimes people will express a desire to review a PR before it lands, perhaps because it touches a particularly sensitive part of the code.

- Always be polite when reviewing: you are a representative of the Rust project, so it is expected that you will go above and beyond when it comes to the Code of Conduct.

high-five

Once you have r+ rights, you can also be added to the high-five rotation. high-five is the bot that assigns incoming PRs to reviewers. If you are added, you will be randomly selected to review PRs. If you find you are assigned a PR that you don't feel comfortable reviewing, you can also leave a comment like r? @so-and-so to assign to someone else. If you don't know who to request, just write r? @nikomatsakis for reassignment and @nikomatsakis will pick someone for you.

Getting on the high-five list is much appreciated as it lowers the review burden for all of us! However, if you don't have time to give people timely feedback on their PRs, it may be better that you don't get on the list.

Full team membership

Full team membership is typically extended once someone has made many contributions to the Rust compiler over time, ideally (but not necessarily) to multiple areas. Sometimes this might be implementing a new feature, but it is also important (perhaps more important!) to have time and willingness to help out with general upkeep such as bugfixes, tracking regressions, and other less glamorous work.

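How to build and run the compiler

The compiler is built using a tool called x.py. You will need to have Python installed to run it. But before we get to that, if you are going to be hacking on rustc, you will want to tweak the configuration of the compiler, because the default configuration is oriented towards users of the compiler rather than developers.

Create a config.toml

To start, copy config.toml.example to config.toml: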

> cd $RUST_CHECKOUT

> cp config.toml.example config.toml

Then you will want to open up the file and change the following settings (and possibly others, such as llvm.ccache, depending on your needs):

[llvm]

# Enables LLVM assertions, which will check that the LLVM bitcode generated

# by the compiler is internally consistent. These are particularly helpful

# if you edit `codegen`.

assertions = true

[rust]

# This will make your build more parallel; it costs a bit of runtime

# performance perhaps (less inlining) but it's worth it.

codegen-units = 0

# This enables full debuginfo and debug assertions. The line debuginfo is also

# enabled by `debuginfo-level = 1`. Full debuginfo is also enabled by

# `debuginfo-level = 2`. Debug assertions can also be enabled with

# `debug-assertions = true`. Note that `debug = true` will make your build

# slower, so you may want to try individually enabling debuginfo and assertions

# or enable only line debuginfo which is basically free.

debug = true

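If you have already built rustc, then you may have to execute rm -rf build for the configuration changes to take effect.

Note that ./x.py clean will not cause a rebuild of LLVM.

So, if your configuration change affects LLVM, you will need to manually rm -rf build/ before rebuilding.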

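What is x.py?

x.py is the script used to orchestrate the various builds in the rustc repository. It can build docs, run tests, and compile rustc. It is now the preferred way to build rustc, replacing the old makefiles. Below are common ways to use x.py effectively for various tasks.

This chapter focuses on the basics needed to be productive, but if you want to learn more about x.py, read its README.md.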

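Bootstrapping

One thing to keep in mind is that rustc is a bootstrapping compiler. That is, since rustc is written in Rust, we need to use an older version of the compiler to compile the newer version. In particular, the newer version of the compiler and some of the artifacts needed to build it, such as libstd and other tooling, may use some unstable features internally, requiring a specific version which understands these unstable features.

The result is that compiling rustc is done in stages:

- Stage 0: the stage0 compiler is usually the current beta rustc compiler and its associated dynamic libraries (you can also configure x.py to use a different version of the compiler). This stage0 compiler is used only to compile rustbuild, std, and rustc. When compiling rustc, the stage0 compiler uses the freshly compiled std. There are two concepts at play here: a compiler (with its set of dependencies) and its "target" or "object" libraries (std and rustc). Both are staged, but in a staggered manner.

- Stage 1: the code in your clone is then compiled with the stage0 compiler to produce the new stage1 compiler. However, it was built with an older compiler (stage0), so to optimize the stage1 compiler we go to the next stage.

  - In theory, the stage1 compiler is functionally identical to the stage2 compiler, but in practice there are subtle differences. In particular, the stage1 compiler itself was built by the stage0 compiler, not by the source in your working directory. This means that the symbol names used in the compiler source may not match the symbol names that would have been made by the stage1 compiler. This can be important when using dynamic linking (e.g., with derives). Sometimes this means that some tests don't work when run with stage1.

- Stage 2: we rebuild the stage1 compiler with itself to produce the stage2 compiler, which has all the latest optimizations. (By default, we copy the stage1 libraries for use by the stage2 compiler, since they ought to be identical.)

- (Optional) Stage 3: to sanity check our new compiler, we can build the libraries with the stage2 compiler. The result ought to be identical to before, unless something has broken.

To read more about the bootstrap process, read this chapter.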
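The ordering of the stages can be sketched as a small shell function. This is only an illustration of which compiler builds which stage; describe_stages is a hypothetical helper and is not part of x.py.

```sh
# Illustrative only: print the chain of compilers up to stage $1.
# describe_stages is a made-up name, not an x.py command.
describe_stages() {
    n=0
    while [ "$n" -le "$1" ]; do
        if [ "$n" -eq 0 ]; then
            # Stage 0 is downloaded, not built from your sources.
            echo "stage0: downloaded compiler (usually the current beta)"
        else
            # Every later stage is built from your sources by the previous stage.
            echo "stage$n: built from your sources by the stage$((n - 1)) compiler"
        fi
        n=$((n + 1))
    done
}
```

Calling describe_stages 2 prints one line per stage, from stage0 up to stage2.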

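Building the compiler

To build a compiler, run ./x.py build. This will do the whole bootstrapping process described above, producing a usable compiler toolchain from the source code you have checked out. This takes a long time, so it is not usually what you want to actually run (more on this later).

There are many flags you can pass to the build command of x.py that can help cut down compile times or fit other things you might need to change. They are: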

Options:

-v, --verbose use verbose output (-vv for very verbose)

-i, --incremental use incremental compilation

--config FILE TOML configuration file for build

--build BUILD build target of the stage0 compiler

--host HOST host targets to build

--target TARGET target targets to build

--on-fail CMD command to run on failure

--stage N stage to build

--keep-stage N stage to keep without recompiling

--src DIR path to the root of the rust checkout

-j, --jobs JOBS number of jobs to run in parallel

-h, --help print this help message

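For hacking, building the stage 1 compiler is often enough, but for final testing and release, the stage 2 compiler is used.

./x.py check is a fast way to check that the Rust compiler builds. It is particularly useful when you are doing some kind of "type-based refactoring", like renaming a method or changing the signature of some function.

Once you have created a config.toml, you are ready to run x.py. There are a lot of options here, but let's start with what is probably the best "go to" command for building a local rust: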

./x.py build -i --stage 1 src/libstd

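This may look like it only builds libstd, but that is not the case. What this command does is the following:

- Build libstd using the stage0 compiler (using incremental)

- Build librustc using the stage0 compiler (using incremental)

  - This produces the stage1 compiler

- Build libstd using the stage1 compiler (cannot use incremental)

This final product (stage1 compiler + libs built using that compiler) is what you need to build other Rust programs (unless you use #![no_std] or #![no_core]).

The command includes the -i switch, which enables incremental compilation. This will be used to speed up the first two steps of the process: in particular, if you make a small change, we ought to be able to use the results of your previous build to produce the stage1 compiler faster.

Unfortunately, incremental cannot be used to speed up making the stage1 libraries. This is because incremental only works when you run the same compiler twice in a row. In this case, we are building a new stage1 compiler every time. Therefore, the old incremental results may not apply.

You may find that building the stage1 libstd is a bottleneck for you, but fear not, there is a (hacky) workaround. See the section on "recommended workflows" below.

Note that this whole command just gives you a subset of the full rustc build. The full rustc build (what you get if you just say ./x.py build) has quite a few more steps:

- Build librustc and rustc with the stage1 compiler.

  - The resulting compiler here is called the "stage2" compiler.

- Build libstd with the stage2 compiler.

- Build librustdoc and a bunch of other things with the stage2 compiler.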

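Build specific components

Build only the libcore library

./x.py build src/libcore

Build only the libcore and libproc_macro libraries

./x.py build src/libcore src/libproc_macro

Build only libcore up to Stage 1

./x.py build src/libcore --stage 1

Sometimes you might just want to test whether the part you are working on can compile. Using these commands you can test that it compiles before doing a bigger build to make sure it works with the compiler. As shown before, you can also pass flags at the end, such as --stage.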

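Creating a rustup toolchain

Once you have successfully built rustc, you will have created a bunch of files in your build directory. In order to actually run the resulting rustc, we recommend creating two rustup toolchains. The first will run the stage1 compiler (which we built above). The second will execute the stage2 compiler (which we did not build, but which you will likely need to build at some point; for example, if you want to run the entire test suite).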

rustup toolchain link stage1 build/<host-triple>/stage1

rustup toolchain link stage2 build/<host-triple>/stage2

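The <host-triple> would typically be one of the following:

- Linux: x86_64-unknown-linux-gnu

- Mac: x86_64-apple-darwin

- Windows: x86_64-pc-windows-msvc

Now you can run the rustc you built. If you run it with -vV, you should see a version number ending in -dev, indicating a build from your local environment: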

$ rustc +stage1 -vV

rustc 1.25.0-dev

binary: rustc

commit-hash: unknown

commit-date: unknown

host: x86_64-unknown-linux-gnu

release: 1.25.0-dev

LLVM version: 4.0
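If you are unsure which host triple applies to your machine, the host: line of rustc -vV (as in the output above) already contains it. The following is a minimal sketch that extracts that line; host_triple_from_version_output is a hypothetical helper name, and the commented usage assumes a rustc is already installed for the same host you are building on.

```sh
# Read `rustc -vV` output on stdin and print only the host triple.
# host_triple_from_version_output is a made-up helper name.
host_triple_from_version_output() {
    sed -n 's/^host: //p'
}

# Hypothetical usage (assumes an installed rustc for the same host):
#   triple=$(rustc -vV | host_triple_from_version_output)
#   rustup toolchain link stage1 "build/$triple/stage1"
```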

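Other x.py commands

Here are a few other useful x.py commands. We will cover some of them in detail in other sections:

- Building things:

  - ./x.py clean – clean up the build directory (rm -rf build works too, but then you have to rebuild LLVM)

  - ./x.py build --stage 1 – builds everything using the stage 1 compiler, not just up to libstd

  - ./x.py build – builds the stage2 compiler

- Running tests (see the section on running tests for more details):

  - ./x.py test --stage 1 src/libstd – runs the #[test] tests from libstd

  - ./x.py test --stage 1 src/test/ui – runs the ui test suite

  - ./x.py test --stage 1 src/test/ui/const-generics – runs all the tests in the const-generics/ subdirectory of the ui test suite

  - ./x.py test --stage 1 src/test/ui/const-generics/const-types.rs – runs the single test const-types.rs from the ui test suite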

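Cleaning out build directories

Sometimes you need to start fresh, but this is normally not the case. If you feel the need to do so, it may be that rustbuild is not doing its job properly, in which case you should file a bug to let us know what is going wrong.

If you do need to clean everything up, you only need to run one command!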

./x.py clean

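Suggested workflows

The full bootstrapping process takes quite a while. Here are three suggestions to make your life easier.

Check, check, and check again

The first workflow, which is useful when doing simple refactorings, is to run ./x.py check continuously. Here you are just checking that the compiler can build, but often that is all you need (e.g., when renaming a method). You can then run ./x.py build when you actually need to run tests.

In fact, it is sometimes useful to put off tests even when you are not 100% sure the code will work. You can then keep building up refactoring commits and only run the tests at some later time. You can then use git bisect to track down precisely which commit caused the problem.

A nice side effect of this style is that you are left with a fairly fine-grained set of commits at the end, all of which build and pass tests. This often helps with review.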

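Incremental builds with --keep-stage

Sometimes just checking whether the compiler builds is not enough. A common example is that you need to add a debug! statement to inspect the value of some state or to better understand the problem. In that case, you really need a full build. By leveraging incremental, though, you can often get these builds to complete very fast (e.g., around 30 seconds). The only catch is that this requires a bit of fudging and may produce compilers that don't work (but that is easily detected and fixed).

The sequence of commands you want is as follows:

- Initial build: ./x.py build -i --stage 1 src/libstd

  - As documented above, this will run all the stage0 commands, which includes building a stage1 compiler and a compatible libstd, and run the first few steps of the "stage 1 actions", up to "stage1 (sysroot stage1) builds libstd".

- Subsequent builds: ./x.py build -i --stage 1 src/libstd --keep-stage 1

  - Note that we added the --keep-stage 1 flag here

As mentioned, the effect of --keep-stage 1 is that we just assume that the old standard library can be reused. If you are editing the compiler, this is almost always true: you haven't changed the standard library, after all. But sometimes it is not true: for example, if you are editing the "metadata" part of the compiler, which controls how the compiler encodes types and other state into the rlib files, or if you are editing things that wind up in the metadata (such as the definition of the MIR).

The TL;DR is that you might get weird behavior from a compiler built with --keep-stage 1, for example strange ICEs or other panics. In that case, you should simply remove --keep-stage 1 from the command and rebuild. That ought to fix the problem.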
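The two commands from this workflow can be wrapped in a small helper so that dropping --keep-stage 1 after a strange ICE is a one-word change. This is a sketch only: stage1_build_cmd is a hypothetical name, and the function merely prints the command line so you can inspect it before running it.

```sh
# Print the x.py command for a stage 1 build.
# $1 = "initial" for a full stage 1 build; anything else (or nothing)
# prints the faster rebuild that reuses the old libstd.
# stage1_build_cmd is a made-up helper, not part of x.py.
stage1_build_cmd() {
    cmd="./x.py build -i --stage 1 src/libstd"
    if [ "$1" != "initial" ]; then
        cmd="$cmd --keep-stage 1"
    fi
    printf '%s\n' "$cmd"
}
```

If a rebuild misbehaves, running the command printed by stage1_build_cmd initial gives a build without --keep-stage 1.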

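Building with system LLVM

By default, LLVM is built from source, and that can take a significant amount of time. An alternative is to use the LLVM already installed on your computer.

This is specified in the target section of config.toml: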

[target.x86_64-unknown-linux-gnu]

llvm-config = "/path/to/llvm/llvm-7.0.1/bin/llvm-config"

We have previously observed the following paths, which may be different from those on your system:

- /usr/bin/llvm-config-8

- /usr/lib/llvm-8/bin/llvm-config
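To find out which candidate location actually exists on a given machine, a helper along the following lines can be used. It is a minimal sketch: first_executable is a hypothetical name, and the paths in the usage comment are just the two observed above, which may not match your system.

```sh
# Print the first argument that is an executable file; fail if none is.
# first_executable is a made-up helper name.
first_executable() {
    for candidate in "$@"; do
        if [ -x "$candidate" ] && [ ! -d "$candidate" ]; then
            printf '%s\n' "$candidate"
            return 0
        fi
    done
    return 1
}

# Hypothetical usage with the paths mentioned above:
#   first_executable /usr/bin/llvm-config-8 /usr/lib/llvm-8/bin/llvm-config
```

The printed path is what you would put in the llvm-config setting of the target section.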

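Note that you need to have the LLVM FileCheck tool installed, which is used for codegen tests. This tool is normally built with LLVM, but if you use your own preinstalled LLVM, you will need to provide FileCheck in some other way. On Debian-based systems, you can install the llvm-N-tools package (where N is the LLVM version number, e.g. llvm-8-tools). Alternatively, you can specify the path to FileCheck with the llvm-filecheck config item in config.toml, or you can disable codegen tests with the codegen-tests item in config.toml.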

Bootstrapping the compiler

This subchapter is about the bootstrapping process.

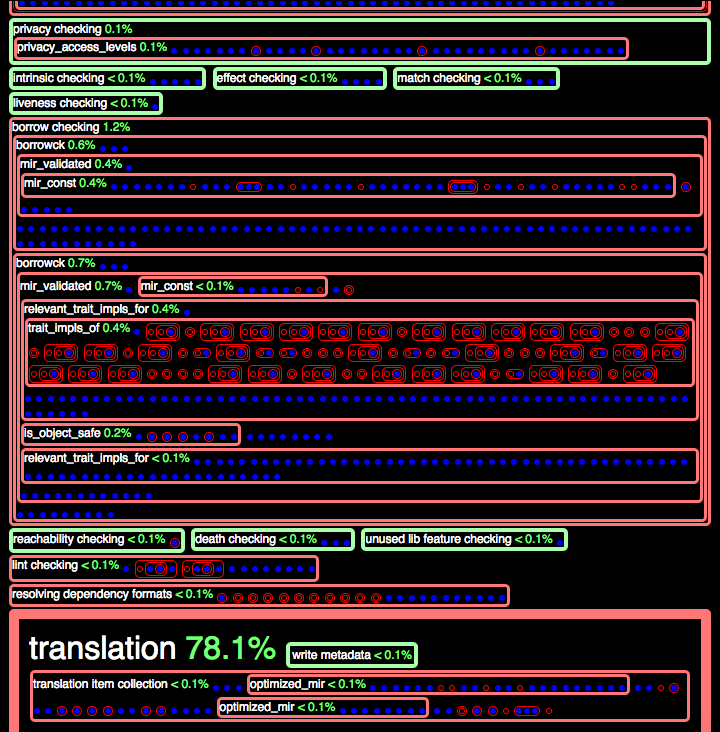

When running x.py you will see output such as:

Building stage0 std artifacts

Copying stage0 std from stage0

Building stage0 compiler artifacts

Copying stage0 rustc from stage0

Building LLVM for x86_64-apple-darwin

Building stage0 codegen artifacts

Assembling stage1 compiler

Building stage1 std artifacts

Copying stage1 std from stage1

Building stage1 compiler artifacts

Copying stage1 rustc from stage1

Building stage1 codegen artifacts

Assembling stage2 compiler

Uplifting stage1 std

Copying stage2 std from stage1

Generating unstable book md files

Building stage0 tool unstable-book-gen

Building stage0 tool rustbook

Documenting standalone

Building rustdoc for stage2

Documenting book redirect pages

Documenting stage2 std

Building rustdoc for stage1

Documenting stage2 whitelisted compiler

Documenting stage2 compiler

Documenting stage2 rustdoc

Documenting error index

Uplifting stage1 rustc

Copying stage2 rustc from stage1

Building stage2 tool error_index_generator

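The diagram at the start of this section gives a deeper look into x.py's phases.

Keep in mind that this diagram is a simplification: for example, rustdoc can be built at different stages, and the process is different when passing flags such as --keep-stage or when there are targets that differ from the host.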

The following tables indicate the outputs of various stage actions:

| Stage 0 Action | Output |
|---|---|
| beta extracted | build/HOST/stage0 |
| stage0 builds bootstrap | build/bootstrap |
| stage0 builds libstd | build/HOST/stage0-std/TARGET |
| copy stage0-std (HOST only) | build/HOST/stage0-sysroot/lib/rustlib/HOST |
| stage0 builds rustc with stage0-sysroot | build/HOST/stage0-rustc/HOST |
| copy stage0-rustc (except executable) | build/HOST/stage0-sysroot/lib/rustlib/HOST |
| build llvm | build/HOST/llvm |
| stage0 builds codegen with stage0-sysroot | build/HOST/stage0-codegen/HOST |
| stage0 builds rustdoc with stage0-sysroot | build/HOST/stage0-tools/HOST |

--stage=0 stops here.

| Stage 1 Action | Output |
|---|---|
| copy (uplift) stage0-rustc executable to stage1 | build/HOST/stage1/bin |
| copy (uplift) stage0-codegen to stage1 | build/HOST/stage1/lib |
| copy (uplift) stage0-sysroot to stage1 | build/HOST/stage1/lib |
| stage1 builds libstd | build/HOST/stage1-std/TARGET |
| copy stage1-std (HOST only) | build/HOST/stage1/lib/rustlib/HOST |
| stage1 builds rustc | build/HOST/stage1-rustc/HOST |
| copy stage1-rustc (except executable) | build/HOST/stage1/lib/rustlib/HOST |
| stage1 builds codegen | build/HOST/stage1-codegen/HOST |

--stage=1 stops here.

| Stage 2 Action | Output |
|---|---|
| copy (uplift) stage1-rustc executable | build/HOST/stage2/bin |
| copy (uplift) stage1-sysroot | build/HOST/stage2/lib and build/HOST/stage2/lib/rustlib/HOST |
| stage2 builds libstd (except HOST?) | build/HOST/stage2-std/TARGET |
| copy stage2-std (not HOST targets) | build/HOST/stage2/lib/rustlib/TARGET |
| stage2 builds rustdoc | build/HOST/stage2-tools/HOST |

--stage=2 stops here.
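The per-stage build directories in the tables follow one pattern, build/HOST/stageN-KIND/TARGET, where KIND is std, rustc, codegen, or tools. The sketch below just spells that pattern out; stage_artifact_dir is a hypothetical helper, and it only covers the stageN-KIND rows, not the sysroot or uplift destinations.

```sh
# Print the build directory for artifacts of a given kind and stage,
# following the build/HOST/stageN-KIND/TARGET pattern from the tables.
# Arguments: host triple, stage number, kind (std|rustc|codegen|tools),
# target triple. stage_artifact_dir is a made-up helper name.
stage_artifact_dir() {
    host=$1
    stage=$2
    kind=$3
    target=$4
    printf 'build/%s/stage%s-%s/%s\n' "$host" "$stage" "$kind" "$target"
}
```

For example, passing x86_64-unknown-linux-gnu as both host and target with stage 1 and kind std gives the directory where the stage1 compiler places its libstd build.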

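Note that the convention x.py uses is that:

- A "stage N artifact" is an artifact that is produced by the stage N compiler.

- The "stage (N+1) compiler" is assembled from "stage N artifacts".

- A --stage N flag means build with stage N.

In short, stage 0 uses the stage0 compiler to create stage0 artifacts, which are later uplifted to stage1.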

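Every time any of the main artifacts (std and rustc) are compiled, two steps are performed. When std is compiled by a stage N compiler, that std will be linked to programs built by the stage N compiler (including the rustc built later on). It will also be used by the stage (N+1) compiler to link against itself. This is somewhat intuitive if one thinks of the stage (N+1) compiler as "just" another program we are building with the stage N compiler. In some ways, rustc (the binary, not the rustbuild step) could be thought of as one of the few no_core binaries out there.

So "stage0 std artifacts" are in fact the output of the downloaded stage0 compiler, and are going to be used for anything built by the stage0 compiler, e.g. rustc artifacts. When x.py announces that it is "building stage1 std artifacts", it has moved on to the next bootstrapping phase. This pattern continues in later stages.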

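Also note that building host std and target std differs depending on the stage (e.g., see in the table how stage2 only builds non-host std targets). This is because during stage2, the host std is uplifted from the stage 1 std: specifically, when "Building stage 1 artifacts" is announced, it is later copied into stage2 as well (both the compiler's libdir and the sysroot).

This std is pretty much necessary for any useful work with the compiler. Specifically, it is used as the std for programs compiled by the newly compiled compiler (so when you compile fn main() {}, it is linked to the last std compiled with x.py build --stage 1 src/libstd).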

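The rustc generated by the stage0 compiler is linked to the freshly built libstd, which means that for the most part only std needs to be cfg-gated, so that rustc can use features added to std immediately after their addition, without waiting for them to get into the downloaded beta. The libstd built by the stage1/bin/rustc compiler, also known as the "stage1 std" artifacts, is not necessarily ABI-compatible with that compiler. That is, the rustc binary most likely could not use this std itself. It is, however, ABI-compatible with any programs that the stage1/bin/rustc binary builds (including itself), so in that sense they are paired.

This is also where --keep-stage 1 src/libstd comes into play. Since most changes to the compiler don't actually change the ABI, once you have produced a libstd in stage 1, you can probably just reuse it with a different compiler. If the ABI hasn't changed, you are good to go, with no need to spend time recompiling that std. --keep-stage simply assumes the previous compile is fine and copies those artifacts into the appropriate place, skipping the cargo invocation.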

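The reason we first build std, then rustc, is largely that we want to minimize cfg(stage0) in the code for rustc. Currently rustc is always linked against a "new" std, so it never needs to be concerned with differences in std; it can assume that the std is as fresh as possible.

The reason we need to build it twice is ABI compatibility. The beta compiler has its own ABI, and the stage1/bin/rustc compiler will produce programs and libraries with the new ABI. We used to build three times, but because we assume that the ABI is constant within a codebase, we presume that the libraries produced by the "stage2" compiler (which is itself produced by the stage1/bin/rustc compiler) are ABI-compatible with the libraries produced by the stage1/bin/rustc compiler. This means that we can skip that final compilation and simply use the same libraries that the stage2/bin/rustc compiler itself uses.

This stage2/bin/rustc compiler is shipped to end users, along with the stage 1 {std, rustc} artifacts.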

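Environment variables

During bootstrapping, a number of compiler-internal environment variables are used. If you are trying to run an intermediate version of rustc, you may sometimes need to set some of these environment variables manually. Otherwise, you get an error like the following: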

thread 'main' panicked at 'RUSTC_STAGE was not set: NotPresent', src/libcore/result.rs:1165:5

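If ./stageN/bin/rustc gives an error about environment variables, that usually means something is quite wrong, or you are trying to compile e.g. librustc or libstd or something that depends on environment variables. In the unlikely case that you actually need to invoke rustc in such a situation, you can find the environment variable values by adding the following flag to your x.py command: --on-fail=print-env.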

Build distribution artifacts

You might want to build and package up the compiler for distribution. You’ll want to run this command to do it:

./x.py dist

Install distribution artifacts

If you’ve built a distribution artifact you might want to install it and test that it works on your target system. You’ll want to run this command:

./x.py install

Note: If you are testing out a modification to a compiler, you might want to use it to compile some project. Usually, you do not want to use ./x.py install for testing. Rather, you should create a toolchain as discussed in the section on creating a rustup toolchain.

For example, if the toolchain you created is called foo, you would then invoke it with rustc +foo ... (where ... represents the rest of the arguments).

Documenting rustc

You might want to build documentation of the various components available, like the standard library. There are two ways to go about this. You can run rustdoc directly on the file to make sure the HTML is correct, which is fast. Alternatively, you can build the documentation as part of the build process through x.py. Both are viable methods since documentation is more about the content.

Document everything

./x.py doc

If you want to avoid the whole Stage 2 build

./x.py doc --stage 1

First the compiler and rustdoc get built to make sure everything is okay and then it documents the files.

Document specific components

./x.py doc src/doc/book

./x.py doc src/doc/nomicon

./x.py doc src/doc/book src/libstd

Much like individual tests or building certain components you can build only the documentation you want.

Document internal rustc items

Compiler documentation is not built by default. To enable it, modify config.toml:

[build]

compiler-docs = true

Note that when enabled, documentation for internal compiler items will also be built.

Compiler Documentation

The documentation for the Rust components is found at rustc doc.

ctags

One of the challenges with rustc is that the RLS can't handle it, since it's a bootstrapping compiler. This makes code navigation difficult. One solution is to use ctags.

ctags has a long history and several variants. Exuberant Ctags seems to be quite commonly distributed but it does not have out-of-box Rust support. Some distributions seem to use Universal Ctags, which is a maintained fork and does have built-in Rust support.

The following script can be used to set up Exuberant Ctags: https://github.com/nikomatsakis/rust-etags.

ctags integrates into emacs and vim quite easily. The following can then be used to build and generate tags:

$ rust-ctags src/lib* && ./x.py build <something>

This allows you to do "jump-to-def" with whatever functions were around when you last built, which is ridiculously useful.

The compiler testing framework

The Rust project runs a wide variety of different tests, orchestrated by the build system (x.py test). The main test harness for testing the compiler itself is a tool called compiletest (located in the src/tools/compiletest directory). This section gives a brief overview of how the testing framework is set up, and then gets into some of the details on how to run tests as well as how to add new tests.

Compiletest test suites

The compiletest tests are located in the tree in the src/test directory. Immediately within you will see a series of subdirectories (e.g. ui, run-make, and so forth). Each of those directories is called a test suite – they house a group of tests that are run in a distinct mode.

Here is a brief summary of the test suites and what they mean. In some cases, the test suites are linked to parts of the manual that give more details.

- ui – tests that check the exact stdout/stderr from compilation and/or running the test

- run-pass-valgrind – tests that ought to run with valgrind

- run-fail – tests that are expected to compile but then panic during execution

- compile-fail – tests that are expected to fail compilation.

- parse-fail – tests that are expected to fail to parse

- pretty – tests targeting the Rust "pretty printer", which generates valid Rust code from the AST

- debuginfo – tests that run in gdb or lldb and query the debug info

- codegen – tests that compile and then test the generated LLVM code to make sure that the optimizations we want are taking effect. See LLVM docs for how to write such tests.

- assembly – similar to codegen tests, but verifies assembly output to make sure LLVM target backend can handle provided code.

- mir-opt – tests that check parts of the generated MIR to make sure we are building things correctly or doing the optimizations we expect.

- incremental – tests for incremental compilation, checking that when certain modifications are performed, we are able to reuse the results from previous compilations.

- run-make – tests that basically just execute a Makefile; the ultimate in flexibility but quite annoying to write.

- rustdoc – tests for rustdoc, making sure that the generated files contain the expected documentation.

- *-fulldeps – same as above, but indicates that the test depends on things other than libstd (and hence those things must be built)

Other Tests

The Rust build system handles running tests for various other things, including:

- Tidy – This is a custom tool used for validating source code style and formatting conventions, such as rejecting long lines. There is more information in the section on coding conventions.

  Example: ./x.py test tidy

- Formatting – Rustfmt is integrated with the build system to enforce uniform style across the compiler. In the CI, we check that the formatting is correct. The formatting check is also automatically run by the Tidy tool mentioned above.

  Example: ./x.py fmt --check checks formatting and exits with an error if formatting is needed.

  Example: ./x.py fmt runs rustfmt on the codebase.

  Example: ./x.py test tidy --bless does formatting before doing other tidy checks.

- Unit tests – The Rust standard library and many of the Rust packages include typical Rust #[test] unit tests. Under the hood, x.py will run cargo test on each package to run all the tests.

  Example: ./x.py test src/libstd

- Doc tests – Example code embedded within Rust documentation is executed via rustdoc --test. Examples:

  ./x.py test src/doc – Runs rustdoc --test for all documentation in src/doc.

  ./x.py test --doc src/libstd – Runs rustdoc --test on the standard library.

- Link checker – A small tool for verifying href links within documentation.

  Example: ./x.py test src/tools/linkchecker

- Dist check – This verifies that the source distribution tarball created by the build system will unpack, build, and run all tests.

  Example: ./x.py test distcheck

- Tool tests – Packages that are included with Rust have all of their tests run as well (typically by running cargo test within their directory). This includes things such as cargo, clippy, rustfmt, rls, miri, bootstrap (testing the Rust build system itself), etc.

- Cargo test – This is a small tool which runs cargo test on a few significant projects (such as servo, ripgrep, tokei, etc.) just to ensure there aren't any significant regressions.

  Example: ./x.py test src/tools/cargotest

Testing infrastructure

When a Pull Request is opened on GitHub, Azure Pipelines will automatically launch a build that will run all tests on some configurations (x86_64-gnu-llvm-6.0 linux, x86_64-gnu-tools linux, mingw-check linux). In essence, it runs ./x.py test after building for each of them.

The integration bot bors is used for coordinating merges to the master branch. When a PR is approved, it goes into a queue where merges are tested one at a time on a wide set of platforms using Azure Pipelines (currently over 50 different configurations). Most platforms only run the build steps, some run a restricted set of tests, only a subset run the full suite of tests (see Rust's platform tiers).

Testing with Docker images

The Rust tree includes Docker image definitions for the platforms used on Azure Pipelines in src/ci/docker. The script src/ci/docker/run.sh is used to build the Docker image, run it, build Rust within the image, and run the tests.

TODO: What is a typical workflow for testing/debugging on a platform that you don't have easy access to? Do people build Docker images and enter them to test things out?

Testing on emulators

Some platforms are tested via an emulator for architectures that aren't readily available. There is a set of tools for orchestrating running the tests within the emulator. Platforms such as arm-android and arm-unknown-linux-gnueabihf are set up to automatically run the tests under emulation on Travis. The following will take a look at how a target's tests are run under emulation.

The Docker image for armhf-gnu includes QEMU to emulate the ARM CPU architecture. Included in the Rust tree are the tools remote-test-client and remote-test-server, which are programs for sending test programs and libraries to the emulator, running the tests within the emulator, and reading the results. The Docker image is set up to launch remote-test-server and the build tools use remote-test-client to communicate with the server to coordinate running tests (see src/bootstrap/test.rs).

TODO: What are the steps for manually running tests within an emulator?

./src/ci/docker/run.sh armhf-gnu will do everything, but takes hours to run and doesn't offer much help with interacting within the emulator.

Is there any support for emulating other (non-Android) platforms, such as running on an iOS emulator?

Is there anything else interesting that can be said here about running tests remotely on real hardware?

It's also unclear to me how the wasm or asm.js tests are run.

Crater

Crater is a tool for compiling and running tests for every crate on crates.io (and a few on GitHub). It is mainly used for checking the extent of breakage when implementing potentially breaking changes and for ensuring lack of breakage by running beta vs stable compiler versions.

When to run Crater

You should request a crater run if your PR makes large changes to the compiler or could cause breakage. If you are unsure, feel free to ask your PR's reviewer.

Requesting Crater Runs

The rust team maintains a few machines that can be used for running crater runs on the changes introduced by a PR. If your PR needs a crater run, leave a comment for the triage team in the PR thread. Please inform the team whether you require a "check-only" crater run, a "build only" crater run, or a "build-and-test" crater run. The difference is primarily in time; the conservative (if you're not sure) option is to go for the build-and-test run. If making changes that will only have an effect at compile-time (e.g., implementing a new trait) then you only need a check run.

Your PR will be enqueued by the triage team and the results will be posted when they are ready. Check runs will take around 3-4 days, with the other two taking 5-6 days on average.

While crater is really useful, it is also important to be aware of a few caveats:

- Not all code is on crates.io! There is a lot of code in repos on GitHub and elsewhere. Also, companies may not wish to publish their code. Thus, a successful crater run is not a magically green light that there will be no breakage; you still need to be careful.

- Crater only runs Linux builds on x86_64. Thus, other architectures and platforms are not tested. Critically, this includes Windows.

- Many crates are not tested. This could be for a lot of reasons, including that the crate doesn't compile any more (e.g. used old nightly features), has broken or flaky tests, requires network access, or other reasons.

- Before crater can be run, @bors try needs to succeed in building artifacts. This means that if your code doesn't compile, you cannot run crater.

Perf runs

A lot of work is put into improving the performance of the compiler and preventing performance regressions. A "perf run" is used to compare the performance of the compiler in different configurations for a large collection of popular crates. Different configurations include "fresh builds", builds with incremental compilation, etc.

The result of a perf run is a comparison between two versions of the compiler (by their commit hashes).

You should request a perf run if your PR may affect performance, especially if it can affect performance adversely.

Further reading

The following blog posts may also be of interest:

- brson's classic "How Rust is tested"

Running tests

You can run the tests using x.py. The most basic command – which you will almost never want to use! – is as follows:

./x.py test

This will build the full stage 2 compiler and then run the whole test suite. You probably don't want to do this very often, because it takes a very long time, and anyway bors / travis will do it for you. (Often, I will run this command in the background after opening a PR that I think is done, but rarely otherwise. -nmatsakis)

The test results are cached and previously successful tests are ignored during testing. The stdout/stderr contents as well as a timestamp file for every test can be found under build/ARCH/test/. To force-rerun a test (e.g. in case the test runner fails to notice a change) you can simply remove the timestamp file.

Note that some tests require a Python-enabled gdb. You can test if your gdb install supports Python by using the python command from within gdb. Once invoked you can type some Python code (e.g. print("hi")) followed by return and then CTRL+D to execute it. If you are building gdb from source, you will need to configure with --with-python=<path-to-python-binary>.
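The same Python check can be run non-interactively. The sketch below assumes your gdb accepts the -batch and -ex command-line options; gdb_has_python and the GDB variable (used to point at a different gdb binary) are hypothetical names introduced for illustration.

```sh
# Succeed if the debugger named by $GDB (default: gdb) can run Python.
# gdb_has_python and GDB are made-up names for this sketch.
gdb_has_python() {
    # Ask gdb to run one Python statement and exit; a Python-enabled gdb
    # prints "hi", anything else (or an error) counts as "no Python".
    "${GDB:-gdb}" -batch -ex 'python print("hi")' 2>/dev/null | grep -q '^hi$'
}

# Hypothetical usage:
#   if gdb_has_python; then echo "gdb supports Python"; fi
```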

Running a subset of the test suites

When working on a specific PR, you will usually want to run a smaller set of tests, and with a stage 1 build. For example, a good "smoke test" that can be used after modifying rustc to see if things are generally working correctly would be the following:

./x.py test --stage 1 src/test/{ui,compile-fail}

This will run the ui and compile-fail test suites, and only with the stage 1 build. Of course, the choice of test suites is somewhat arbitrary, and may not suit the task you are doing. For example, if you are hacking on debuginfo, you may be better off with the debuginfo test suite:

./x.py test --stage 1 src/test/debuginfo

If you only need to test a specific subdirectory of tests for any given test suite, you can pass that directory to x.py test:

./x.py test --stage 1 src/test/ui/const-generics

Likewise, you can test a single file by passing its path:

./x.py test --stage 1 src/test/ui/const-generics/const-test.rs

Run only the tidy script

./x.py test tidy

Run tests on the standard library

./x.py test src/libstd

Run the tidy script and tests on the standard library

./x.py test tidy src/libstd

Run tests on the standard library using a stage 1 compiler

> ./x.py test src/libstd --stage 1

By listing which test suites you want to run you avoid having to run tests for components you did not change at all.

Warning: Note that bors only runs the tests with the full stage 2 build; therefore, while the tests usually work fine with stage 1, there are some limitations.

Running an individual test

Another common thing that people want to do is to run an individual

test, often the test they are trying to fix. As mentioned earlier,

you may pass the full file path to achieve this, or alternatively one

may invoke x.py with the --test-args option:

./x.py test --stage 1 src/test/ui --test-args issue-1234

Under the hood, the test runner invokes the standard rust test runner

(the same one you get with #[test]), so this command would wind up

filtering for tests that include "issue-1234" in the name. (Thus

--test-args is a good way to run a collection of related tests.)
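The filtering behaviour described above can be pictured with a small sketch. This is only a simplified model of substring filtering, not the test runner's actual code; the function name `filter_tests` and the example paths are made up for illustration.

```rust
/// Hypothetical helper: keeps only the test names that contain `filter`
/// as a substring, which is roughly what passing a filter string does.
pub fn filter_tests<'a>(names: &[&'a str], filter: &str) -> Vec<&'a str> {
    names
        .iter()
        .copied()
        .filter(|name| name.contains(filter))
        .collect()
}
```

Because the match is a plain substring test, a filter such as `issue-1234` would also select a test named `issue-12345`, which is why the flag is handy for running groups of related tests.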

Editing and updating the reference files

If you have changed the compiler's output intentionally, or you are

making a new test, you can pass --bless to the test subcommand. E.g.

if some tests in src/test/ui are failing, you can run

./x.py test --stage 1 src/test/ui --bless

to automatically adjust the .stderr, .stdout or .fixed files of

all tests. Of course you can also target just specific tests with the

--test-args your_test_name flag, just like when running the tests.
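Conceptually, blessing replaces the stored reference output with whatever the compiler produced. The sketch below is an assumption-laden illustration of that idea, not compiletest's implementation; `check_or_bless` is a hypothetical name.

```rust
use std::fs;
use std::io;
use std::path::Path;

/// Hypothetical helper: compares `actual` with the reference file.
/// Returns Ok(true) when the output matches (or was just blessed) and
/// Ok(false) on a mismatch that was not blessed.
pub fn check_or_bless(reference: &Path, actual: &str, bless: bool) -> io::Result<bool> {
    // Simplification: a missing or unreadable reference file counts as empty.
    let expected = fs::read_to_string(reference).unwrap_or_default();
    if expected == actual {
        return Ok(true);
    }
    if bless {
        // Overwrite (or create) the reference file with the new output.
        fs::write(reference, actual)?;
        return Ok(true);
    }
    Ok(false)
}
```

Since blessing accepts any output, it is worth reviewing the resulting diff of the reference files before committing them.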

Passing --pass $mode

Pass UI tests now have three modes, check-pass, build-pass and

run-pass. When --pass $mode is passed, these tests will be forced

to run under the given $mode unless the directive // ignore-pass

exists in the test file. For example, you can run all the tests in

src/test/ui as check-pass:

./x.py test --stage 1 src/test/ui --pass check

By passing --pass $mode, you can reduce the testing time. For each

mode, please see here.

Using incremental compilation

You can further enable the --incremental flag to save additional

time in subsequent rebuilds:

./x.py test --stage 1 src/test/ui --incremental --test-args issue-1234

If you don't want to include the flag with every command, you can

enable it in the config.toml, too:

# Whether to always use incremental compilation when building rustc

incremental = true

Note that incremental compilation will use more disk space than usual.

If disk space is a concern for you, you might want to check the size

of the build directory from time to time.

Running tests manually

Sometimes it's easier and faster to just run the test by hand. Most tests are

just rs files, so you can do something like

rustc +stage1 src/test/ui/issue-1234.rs

This is much faster, but doesn't always work. For example, some tests include directives that specify specific compiler flags, or which rely on other crates, and they may not run the same without those options.

Adding new tests

In general, we expect every PR that fixes a bug in rustc to come accompanied by a regression test of some kind. This test should fail in master but pass after the PR. These tests are really useful for preventing us from repeating the mistakes of the past.

To add a new test, the first thing you generally do is to create a file, typically a Rust source file. Test files have a particular structure:

- They should have some kind of comment explaining what the test is about;

- next, they can have one or more header commands, which are special comments that the test interpreter knows how to interpret.

- finally, they have the Rust source. This may have various error annotations which indicate expected compilation errors or warnings.

Depending on the test suite, there may be some other details to be aware of:

- For the ui test suite, you need to generate reference output files.

What kind of test should I add?

It can be difficult to know what kind of test to use. Here are some rough heuristics:

- Some tests have specialized needs:

  - need to run gdb or lldb? use the debuginfo test suite

  - need to inspect LLVM IR or MIR IR? use the codegen or mir-opt test suites

  - need to run rustdoc? Prefer a rustdoc test

  - need to inspect the resulting binary in some way? Then use run-make

- For most other things, a ui (or ui-fulldeps) test is to be preferred:

  - ui tests subsume both run-pass, compile-fail, and parse-fail tests

  - in the case of warnings or errors, ui tests capture the full output, which makes it easier to review but also helps prevent "hidden" regressions in the output

Naming your test

We have not traditionally had a lot of structure in the names of

tests. Moreover, for a long time, the rustc test runner did not

support subdirectories (it now does), so test suites like

src/test/ui have a huge mess of files in them. This is not

considered an ideal setup.

For regression tests – basically, some random snippet of code that

came in from the internet – we often name the test after the issue

plus a short description. Ideally, the test should be added to a

directory that helps identify what piece of code is being tested here

(e.g., src/test/ui/borrowck/issue-54597-reject-move-out-of-borrow-via-pat.rs)

If you've tried and cannot find a more relevant place,

the test may be added to src/test/ui/issues/.

Still, do include the issue number somewhere.

When writing a new feature, create a subdirectory to store your

tests. For example, if you are implementing RFC 1234 ("Widgets"),

then it might make sense to put the tests in a directory like

src/test/ui/rfc1234-widgets/.

In other cases, there may already be a suitable directory. (The proper directory structure to use is actually an area of active debate.)

Comment explaining what the test is about

When you create a test file, include a comment summarizing the point of the test at the start of the file. This should highlight which parts of the test are more important, and what the bug was that the test is fixing. Citing an issue number is often very helpful.

This comment doesn't have to be super extensive. Just something like "Regression test for #18060: match arms were matching in the wrong order." might already be enough.

These comments are very useful to others later on when your test breaks, since they often can highlight what the problem is. They are also useful if for some reason the tests need to be refactored, since they let others know which parts of the test were important (often a test must be rewritten because it no longer tests what it was meant to test, and then it's useful to know what it was meant to test exactly).

Header commands: configuring rustc

Header commands are special comments that the test runner knows how to

interpret. They must appear before the Rust source in the test. They

are normally put after the short comment that explains the point of

this test. For example, this test uses the // compile-flags command

to specify a custom flag to give to rustc when the test is compiled:

// Test the behavior of `0 - 1` when overflow checks are disabled.

// compile-flags: -Coverflow-checks=off

fn main() {

let x = 0 - 1;

...

}

Ignoring tests

These are used to ignore the test in some situations, which means the test won't be compiled or run.

- ignore-X where X is a target detail or stage will ignore the test accordingly (see below)

- only-X is like ignore-X, but will only run the test on that target or stage

- ignore-pretty will not compile the pretty-printed test (this is done to test the pretty-printer, but might not always work)

- ignore-test always ignores the test

- ignore-lldb and ignore-gdb will skip a debuginfo test on that debugger.

- ignore-gdb-version can be used to ignore the test when certain gdb versions are used

Some examples of X in ignore-X:

- Architecture: aarch64, arm, asmjs, mips, wasm32, x86_64, x86, ...

- OS: android, emscripten, freebsd, ios, linux, macos, windows, ...

- Environment (fourth word of the target triple): gnu, msvc, musl.

- Pointer width: 32bit, 64bit.

- Stage: stage0, stage1, stage2.
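To make the matching rules concrete, here is a minimal sketch of how an ignore-X or only-X header could be checked against the current platform. The `Target` struct and both functions are hypothetical and much simpler than compiletest's real logic; debugger- and version-specific headers such as ignore-gdb are deliberately not modelled.

```rust
/// Hypothetical description of the platform a test is running on.
pub struct Target<'a> {
    pub arch: &'a str,      // e.g. "x86_64"
    pub os: &'a str,        // e.g. "linux"
    pub env: &'a str,       // e.g. "gnu"
    pub pointer_width: u32, // 32 or 64
    pub stage: u32,         // 0, 1 or 2
}

/// Returns true if `name` (the X in ignore-X / only-X) describes the target.
pub fn matches_target(name: &str, t: &Target) -> bool {
    name == t.arch
        || name == t.os
        || name == t.env
        || name == format!("{}bit", t.pointer_width)
        || name == format!("stage{}", t.stage)
}

/// Decides whether a header line such as `// ignore-windows` or
/// `// only-64bit` means the test should be skipped on this target.
pub fn should_skip(line: &str, t: &Target) -> bool {
    let directive = match line.trim_start().strip_prefix("//") {
        Some(rest) => rest.trim(),
        None => return false, // not a comment, so not a header
    };
    if directive == "ignore-test" {
        return true;
    }
    if let Some(name) = directive.strip_prefix("ignore-") {
        return matches_target(name, t);
    }
    if let Some(name) = directive.strip_prefix("only-") {
        return !matches_target(name, t);
    }
    false
}
```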

Other Header Commands

Here is a list of other header commands. This list is not

exhaustive. Header commands can generally be found by browsing the

TestProps structure found in header.rs from the compiletest

source.

- run-rustfix for UI tests, indicates that the test produces structured suggestions. The test writer should create a .fixed file, which contains the source with the suggestions applied. When the test is run, compiletest first checks that the correct lint/warning is generated. Then, it applies the suggestion and compares against .fixed (they must match). Finally, the fixed source is compiled, and this compilation is required to succeed. The .fixed file can also be generated automatically with the --bless option, described in this section.

- min-gdb-version specifies the minimum gdb version required for this test; see also ignore-gdb-version

- min-lldb-version specifies the minimum lldb version required for this test

- rust-lldb causes the lldb part of the test to only be run if the lldb in use contains the Rust plugin

- no-system-llvm causes the test to be ignored if the system llvm is used

- min-llvm-version specifies the minimum llvm version required for this test

- min-system-llvm-version specifies the minimum system llvm version required for this test; the test is ignored if the system llvm is in use and it doesn't meet the minimum version. This is useful when an llvm feature has been backported to rust-llvm

- ignore-llvm-version can be used to skip the test when certain LLVM versions are used. This takes one or two arguments; the first argument is the first version to ignore. If no second argument is given, all subsequent versions are ignored; otherwise, the second argument is the last version to ignore.

- build-pass for UI tests, indicates that the test is supposed to successfully compile and link, as opposed to the default where the test is supposed to error out.

- compile-flags passes extra command-line args to the compiler, e.g. compile-flags: -g which forces debuginfo to be enabled.

- should-fail indicates that the test should fail; used for "meta testing", where we test the compiletest program itself to check that it will generate errors in appropriate scenarios. This header is ignored for pretty-printer tests.

- gate-test-X where X is a feature marks the test as "gate test" for feature X. Such tests are supposed to ensure that the compiler errors when usage of a gated feature is attempted without the proper #![feature(X)] tag. Each unstable lang feature is required to have a gate test.

Error annotations

Error annotations specify the errors that the compiler is expected to emit. They are "attached" to the line in source where the error is located.

- ~: Associates the following error level and message with the current line

- ~|: Associates the following error level and message with the same line as the previous comment

- ~^: Associates the following error level and message with the previous line. Each caret (^) that you add adds a line to this, so ~^^^^^^^ is seven lines up.

The error levels that you can have are:

- ERROR

- WARNING

- NOTE

- HELP and SUGGESTION*

* Note: SUGGESTION must follow immediately after HELP.
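The way an annotation is tied to a source line can be sketched as follows. This is a simplified illustration rather than compiletest's parser: `Expected` and `parse_annotation` are hypothetical names, revision-specific annotations (such as `//[foo]~`) are not handled, and the level is not validated.

```rust
#[derive(Debug, PartialEq)]
pub struct Expected {
    pub line: usize,     // 1-based line the diagnostic is attached to
    pub level: String,   // e.g. "ERROR"
    pub message: String, // text after the level
}

/// Parses the annotation found on line `line_no` (1-based), if any.
/// `previous` is the target line of the preceding annotation, used by `//~|`.
pub fn parse_annotation(line_no: usize, text: &str, previous: Option<usize>) -> Option<Expected> {
    let start = text.find("//~")?;
    let rest = &text[start + 3..];
    let (target, rest) = if let Some(r) = rest.strip_prefix('|') {
        // `~|`: same line as the previous annotation.
        (previous?, r)
    } else {
        // `~` followed by N carets: N lines above the current one.
        let carets = rest.chars().take_while(|&c| c == '^').count();
        (line_no.checked_sub(carets)?, &rest[carets..])
    };
    let rest = rest.trim_start();
    let (level, message) = match rest.split_once(char::is_whitespace) {
        Some((level, message)) => (level, message.trim()),
        None => (rest, ""),
    };
    Some(Expected {
        line: target,
        level: level.to_string(),
        message: message.to_string(),
    })
}
```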

Revisions

Certain classes of tests support "revisions" (as of the time of this writing, this includes compile-fail, run-fail, and incremental, though incremental tests are somewhat different). Revisions allow a single test file to be used for multiple tests. This is done by adding a special header at the top of the file:

// revisions: foo bar baz

This will result in the test being compiled (and tested) three times,

once with --cfg foo, once with --cfg bar, and once with --cfg baz. You can therefore use #[cfg(foo)] etc within the test to tweak

each of these results.

You can also customize headers and expected error messages to a particular

revision. To do this, add [foo] (or bar, baz, etc) after the //

comment, like so:

// A flag to pass in only for cfg `foo`:

//[foo]compile-flags: -Z verbose

#[cfg(foo)]

fn test_foo() {

    let x: usize = 32_u32; //[foo]~ ERROR mismatched types

}

Note that not all headers have meaning when customized to a revision.

For example, the ignore-test header (and all "ignore" headers)

currently only apply to the test as a whole, not to particular

revisions. The only headers that are intended to really work when

customized to a revision are error patterns and compiler flags.
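As a rough illustration of the revision mechanism, the sketch below shows how a header line could be filtered by revision and how a revision name maps onto a `--cfg` argument. Both functions are hypothetical and only approximate what the test runner does.

```rust
/// If `line` is a header comment that applies to `revision`, returns the
/// directive text. Lines without a `[rev]` prefix apply to every revision.
pub fn directive_for_revision<'a>(line: &'a str, revision: &str) -> Option<&'a str> {
    let rest = line.trim_start().strip_prefix("//")?;
    if let Some(tagged) = rest.strip_prefix('[') {
        let (rev, directive) = tagged.split_once(']')?;
        if rev == revision {
            Some(directive.trim())
        } else {
            None
        }
    } else {
        Some(rest.trim())
    }
}

/// The extra compiler arguments implied by running a given revision.
pub fn cfg_args(revision: &str) -> Vec<String> {
    vec!["--cfg".to_string(), revision.to_string()]
}
```

With this model, `//[foo]compile-flags: -Z verbose` contributes its flag only when the `foo` revision is being compiled, while an untagged header is seen by all revisions.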

Guide to the UI tests

The UI tests are intended to capture the compiler's complete output,

so that we can test all aspects of the presentation. They work by

compiling a file (e.g., ui/hello_world/main.rs),

capturing the output, and then applying some normalization (see

below). This normalized result is then compared against reference

files named ui/hello_world/main.stderr and

ui/hello_world/main.stdout. If either of those files doesn't exist,

the output must be empty (that is actually the case for

this particular test). If the test run fails, we will print out

the current output, but it is also saved in

build/<target-triple>/test/ui/hello_world/main.stdout (this path is

printed as part of the test failure message), so you can run diff

and so forth.

Tests that do not result in compile errors

By default, a UI test is expected not to compile (in which case,

it should contain at least one //~ ERROR annotation). However, you

can also make UI tests where compilation is expected to succeed, and

you can even run the resulting program. Just add one of the following

header commands:

- // check-pass - compilation should succeed but skip codegen (which is expensive and isn't supposed to fail in most cases)

- // build-pass - compilation and linking should succeed but do not run the resulting binary

- // run-pass - compilation should succeed and we should run the resulting binary

Normalization

The normalization applied is aimed at eliminating output difference between platforms, mainly about filenames:

- the test directory is replaced with $DIR

- all backslashes (\) are converted to forward slashes (/) (for Windows)

- all CR LF newlines are converted to LF
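The three built-in rules above can be sketched in a few lines. This is only a model of the idea, assuming the test directory is given as a plain string; the function name `normalize` is hypothetical and the real implementation handles more cases.

```rust
/// Applies the three built-in normalizations to compiler output:
/// test directory -> $DIR, backslashes -> forward slashes, CR LF -> LF.
pub fn normalize(output: &str, test_dir: &str) -> String {
    output
        // Replace the directory first, while it still uses the platform's
        // own path separators.
        .replace(test_dir, "$DIR")
        .replace('\\', "/")
        .replace("\r\n", "\n")
}
```

After these steps, output produced on Windows and on Linux should compare equal against the same reference file, as long as the only differences were paths and line endings.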

Sometimes these built-in normalizations are not enough. In such cases, you may provide custom normalization rules using the header commands, e.g.

// normalize-stdout-test: "foo" -> "bar"

// normalize-stderr-32bit: "fn\(\) \(32 bits\)" -> "fn\(\) \($$PTR bits\)"

// normalize-stderr-64bit: "fn\(\) \(64 bits\)" -> "fn\(\) \($$PTR bits\)"

This tells the test that, on 32-bit platforms, whenever the compiler writes

fn() (32 bits) to stderr, it should be normalized to read fn() ($PTR bits)

instead. The same applies on 64-bit platforms. The replacement is performed with regexes, using

the default regex flavor provided by the regex crate.

The corresponding reference file will use the normalized output to test both 32-bit and 64-bit platforms:

...

|

= note: source type: fn() ($PTR bits)

= note: target type: u16 (16 bits)

...

Please see ui/transmute/main.rs and main.stderr for a

concrete usage example.

Besides normalize-stderr-32bit and -64bit, one may use any target

information or stage supported by ignore-X here as well (e.g.

normalize-stderr-windows or simply normalize-stderr-test for unconditional

replacement).

compiletest

Introduction

compiletest is the main test harness of the Rust test suite. It allows

test authors to organize large numbers of tests (the Rust compiler has many

thousands), executes tests efficiently (parallel execution is supported), and

lets test authors configure the behavior and expected results of both

individual tests and groups of tests.

compiletest tests may check test code for success, for failure or in some

cases, even failure to compile. Tests are typically organized as a Rust source

file with annotations in comments before and/or within the test code, which

serve to direct compiletest on if or how to run the test, what behavior to

expect, and more. If you are unfamiliar with the compiler testing framework,

see this chapter for additional background.

The tests themselves are typically (but not always) organized into

"suites" – for example, run-fail,

a folder holding tests that should compile successfully,

but return a failure (non-zero status), compile-fail, a folder holding tests

that should fail to compile, and many more. The various suites are defined in

src/tools/compiletest/src/common.rs in the pub enum Mode

declaration. And a very good introduction to the different suites of compiler

tests along with details about them can be found in Adding new

tests.

Adding a new test file

Briefly, simply create your new test in the appropriate location under

src/test. No registration of test files is necessary as compiletest

will scan the src/test subfolder recursively, and will execute any Rust

source files it finds as tests. See Adding new tests

for a complete guide on how to add new tests.

Header Commands

Source file annotations which appear in comments near the top of the source

file before any test code are known as header commands. These commands can

instruct compiletest to ignore this test, set expectations on whether it is

expected to succeed at compiling, or what the test's return code is expected to

be. Header commands (and their inline counterparts, Error Info commands) are

described more fully

here.

Adding a new header command

Header commands are defined in the TestProps struct in

src/tools/compiletest/src/header.rs. At a high level, there are

dozens of test properties defined here, all set to default values in the

TestProps struct's impl block. Any test can override this default value by

specifying the property in question as a header command in a comment (//) in

the test source file, before any source code.

Using a header command

Here is an example, specifying the must-compile-successfully header command,

which takes no arguments, followed by the failure-status header command,

which takes a single argument (which, in this case is a value of 1).

failure-status is instructing compiletest to expect a failure status of 1

(rather than the current Rust default of 101). The header command and

the argument list (if present) are typically separated by a colon:

// must-compile-successfully

// failure-status: 1

#![feature(termination_trait)]

use std::io::{Error, ErrorKind};

fn main() -> Result<(), Box<Error>> {

Err(Box::new(Error::new(ErrorKind::Other, "returned Box<Error> from main()")))

}

Adding a new header command property

One would add a new header command if there is a need to define some test property or behavior on an individual, test-by-test basis. A header command property serves as the header command's backing store (holds the command's current value) at runtime.

To add a new header command property:

1. Look for the pub struct TestProps declaration in

src/tools/compiletest/src/header.rs and add the new public

property to the end of the declaration.

2. Look for the impl TestProps implementation block immediately following

the struct declaration and initialize the new property to its default

value.

Adding a new header command parser

When compiletest encounters a test file, it parses the file a line at a time

by calling every parser defined in the Config struct's implementation block,

also in src/tools/compiletest/src/header.rs (note the Config

struct's declaration block is found in

src/tools/compiletest/src/common.rs). TestProps's load_from()

method will try passing the current line of text to each parser, which, in turn

typically checks to see if the line begins with a particular commented (//)

header command such as // must-compile-successfully or // failure-status.

Whitespace after the comment marker is optional.

A parser overrides a header command property's default value either simply because the header command is present in the test file, or by using the parameter value given there, depending on the header command.

Parsers defined in impl Config are typically named parse_<header_command>

(note kebab-case <header-command> transformed to snake-case

<header_command>). impl Config also defines several 'low-level' parsers

which make it simple to parse common patterns like simple presence or not

(parse_name_directive()), header-command:parameter(s)

(parse_name_value_directive()), optional parsing only if a particular cfg

attribute is defined (has_cfg_prefix()) and many more. The low-level parsers

are found near the end of the impl Config block; be sure to look through them

and their associated parsers immediately above to see how they are used to

avoid writing additional parsing code unnecessarily.

As a concrete example, here is the implementation for the

parse_failure_status() parser, in

src/tools/compiletest/src/header.rs:

@@ -232,6 +232,7 @@ pub struct TestProps {

// customized normalization rules

pub normalize_stdout: Vec<(String, String)>,

pub normalize_stderr: Vec<(String, String)>,

+ pub failure_status: i32,

}

impl TestProps {

@@ -260,6 +261,7 @@ impl TestProps {

run_pass: false,

normalize_stdout: vec![],

normalize_stderr: vec![],

+ failure_status: 101,

}

}

@@ -383,6 +385,10 @@ impl TestProps {

if let Some(rule) = config.parse_custom_normalization(ln, "normalize-stderr") {

self.normalize_stderr.push(rule);

}

+

+ if let Some(code) = config.parse_failure_status(ln) {

+ self.failure_status = code;

+ }

});

for key in &["RUST_TEST_NOCAPTURE", "RUST_TEST_THREADS"] {

@@ -488,6 +494,13 @@ impl Config {

self.parse_name_directive(line, "pretty-compare-only")

}

+ fn parse_failure_status(&self, line: &str) -> Option<i32> {

+ match self.parse_name_value_directive(line, "failure-status") {

+ Some(code) => code.trim().parse::<i32>().ok(),

+ _ => None,

+ }

+ }
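For readers who want to experiment outside the compiler tree, the parser in the diff above can be approximated in a self-contained form. The helpers below reuse the names from the text but are simplified stand-ins written for this illustration; the real methods live on Config and behave somewhat differently.

```rust
/// Simplified stand-in: true if the line is exactly `// <directive>`.
pub fn parse_name_directive(line: &str, directive: &str) -> bool {
    match line.trim_start().strip_prefix("//") {
        Some(rest) => rest.trim() == directive,
        None => false,
    }
}

/// Simplified stand-in: returns the text after `// <directive>:` if the
/// line is that header command. Whitespace after `//` is optional.
pub fn parse_name_value_directive(line: &str, directive: &str) -> Option<String> {
    let rest = line.trim_start().strip_prefix("//")?.trim_start();
    let value = rest.strip_prefix(directive)?.strip_prefix(':')?;
    Some(value.trim().to_string())
}

/// Mirrors the shape of the parser shown in the diff: extract the value,
/// then try to read it as an integer exit code.
pub fn parse_failure_status(line: &str) -> Option<i32> {
    parse_name_value_directive(line, "failure-status")?
        .parse::<i32>()
        .ok()
}
```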

Implementing the behavior change

When a test invokes a particular header command, it is expected that some

behavior will change as a result. What behavior, obviously, will depend on the

purpose of the header command. In the case of failure-status, the behavior

that changes is that compiletest expects the failure code defined by the

header command invoked in the test, rather than the default value.

Although specific to failure-status (as every header command will have a

different implementation in order to invoke behavior change) perhaps it is

helpful to see the behavior change implementation of one case, simply as an

example. To implement failure-status, the check_correct_failure_status()

function found in the TestCx implementation block, located in

src/tools/compiletest/src/runtest.rs,

was modified as per below:

@@ -295,11 +295,14 @@ impl<'test> TestCx<'test> {

}

fn check_correct_failure_status(&self, proc_res: &ProcRes) {

- // The value the rust runtime returns on failure

- const RUST_ERR: i32 = 101;

- if proc_res.status.code() != Some(RUST_ERR) {

+ let expected_status = Some(self.props.failure_status);

+ let received_status = proc_res.status.code();

+

+ if expected_status != received_status {

self.fatal_proc_rec(

- &format!("failure produced the wrong error: {}", proc_res.status),

+ &format!("Error: expected failure status ({:?}) but received status {:?}.",

+ expected_status,

+ received_status),

proc_res,

);

}

@@ -320,7 +323,6 @@ impl<'test> TestCx<'test> {

);

let proc_res = self.exec_compiled_test();

-

if !proc_res.status.success() {

self.fatal_proc_rec("test run failed!", &proc_res);

}

@@ -499,7 +501,6 @@ impl<'test> TestCx<'test> {

expected,

actual

);

- panic!();

}

}

Note the use of self.props.failure_status to access the header command

property. In tests which do not specify the failure status header command,

self.props.failure_status will evaluate to the default value of 101 at the

time of this writing. But for a test which specifies a header command of, for

example, // failure-status: 1, self.props.failure_status will evaluate to

1, as parse_failure_status() will have overridden the TestProps default

value, for that test specifically.
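The interplay between the default value and a per-test override can be modelled in isolation. The sketch below uses a hypothetical, cut-down `Props` struct rather than the real TestProps, and returns a `Result` instead of aborting the test run.

```rust
/// Hypothetical, cut-down version of the test properties.
pub struct Props {
    pub failure_status: i32,
}

impl Default for Props {
    fn default() -> Self {
        // 101 is the default failure status mentioned in the text.
        Props { failure_status: 101 }
    }
}

/// Starts from the defaults and lets a `// failure-status: N` header
/// in the test source override them.
pub fn load_props(source: &str) -> Props {
    let mut props = Props::default();
    for line in source.lines() {
        if let Some(value) = line.trim().strip_prefix("// failure-status:") {
            if let Ok(code) = value.trim().parse::<i32>() {
                props.failure_status = code;
            }
        }
    }
    props
}

/// Compares the expected status with the one the process returned.
pub fn check_failure_status(props: &Props, received: Option<i32>) -> Result<(), String> {
    let expected = Some(props.failure_status);
    if expected == received {
        Ok(())
    } else {
        Err(format!(
            "expected failure status ({:?}) but received status {:?}",
            expected, received
        ))
    }
}
```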

Walkthrough: a typical contribution

There are a lot of ways to contribute to the rust compiler, including fixing bugs, improving performance, helping design features, providing feedback on existing features, etc. This chapter does not claim to scratch the surface. Instead, it walks through the design and implementation of a new feature. Not all of the steps and processes described here are needed for every contribution, and I will try to point those out as they arise.

In general, if you are interested in making a contribution and aren't sure where to start, please feel free to ask!

Overview

The feature I will discuss in this chapter is the ? Kleene operator for

macros. Basically, we want to be able to write something like this:

macro_rules! foo {

($arg:ident $(, $optional_arg:ident)?) => {

println!("{}", $arg);

$(

println!("{}", $optional_arg);

)?

}

}

fn main() {

let x = 0;

foo!(x); // ok! prints "0"

foo!(x, x); // ok! prints "0" twice, on separate lines

}

So basically, the $(pat)? matcher in the macro means "this pattern can occur

0 or 1 times", similar to other regex syntaxes.
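A variant that returns a value instead of printing makes the two cases easy to check. The macro `join_words` and the function `demo` below are invented for this illustration and are not part of the original feature work.

```rust
/// Builds a String from one word and, optionally, a second word.
macro_rules! join_words {
    ($first:expr $(, $second:expr)?) => {{
        // `mut` is only needed when the optional part is present.
        #[allow(unused_mut)]
        let mut out = String::from($first);
        $(
            out.push(' ');
            out.push_str($second);
        )?
        out
    }};
}

/// Exercises both forms: without and with the optional argument.
pub fn demo() -> (String, String) {
    (join_words!("hello"), join_words!("hello", "world"))
}
```

The `$( ... )?` group in the expansion is emitted once when the optional argument was matched and not at all otherwise, mirroring the matcher.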

There were a number of steps to go from an idea to stable rust feature. Here is a quick list. We will go through each of these in order below. As I mentioned before, not all of these are needed for every type of contribution.

- Idea discussion/Pre-RFC A Pre-RFC is an early draft or design discussion of a feature. This stage is intended to flesh out the design space a bit and get a grasp on the different merits and problems with an idea. It's a great way to get early feedback on your idea before presenting it to the wider audience. You can find the original discussion here.

- RFC This is when you formally present your idea to the community for consideration. You can find the RFC here.

- Implementation Implement your idea unstably in the compiler. You can find the original implementation here.

- Possibly iterate/refine As the community gets experience with your feature on the nightly compiler and in libstd, there may be additional feedback about design choice that might be adjusted. This particular feature went through a number of iterations.

- Stabilization When your feature has baked enough, a rust team member may propose to stabilize it. If there is consensus, this is done.

- Relax Your feature is now a stable rust feature!

Pre-RFC and RFC

NOTE: In general, if you are not proposing a new feature or substantial change to rust or the ecosystem, you don't need to follow the RFC process. Instead, you can just jump to implementation.

You can find the official guidelines for when to open an RFC here.

An RFC is a document that describes the feature or change you are proposing in detail. Anyone can write an RFC; the process is the same for everyone, including rust team members.

To open an RFC, open a PR on the rust-lang/rfcs repo on GitHub. You can find detailed instructions in the README.

Before opening an RFC, you should do the research to "flesh out" your idea. Hastily-proposed RFCs tend not to be accepted. You should generally have a good description of the motivation, impact, disadvantages, and potential interactions with other features.

If that sounds like a lot of work, it's because it is. But no fear! Even if you're not a compiler hacker, you can get great feedback by doing a pre-RFC. This is an informal discussion of the idea. The best place to do this is internals.rust-lang.org. Your post doesn't have to follow any particular structure. It doesn't even need to be a cohesive idea. Generally, you will get tons of feedback that you can integrate back to produce a good RFC.

(Another pro-tip: try searching the RFCs repo and internals for prior related ideas. A lot of times an idea has already been considered and was either rejected or postponed to be tried again later. This can save you and everybody else some time)

In the case of our example, a participant in the pre-RFC thread pointed out a syntax ambiguity and a potential resolution. Also, the overall feedback seemed positive. In this case, the discussion converged pretty quickly, but for some ideas, a lot more discussion can happen (e.g. see this RFC which received a whopping 684 comments!). If that happens, don't be discouraged; it means the community is interested in your idea, but it perhaps needs some adjustments.

The RFC for our ? macro feature did receive some discussion on the RFC thread

too. As with most RFCs, there were a few questions that we couldn't answer by

discussion: we needed experience using the feature to decide. Such questions

are listed in the "Unresolved Questions" section of the RFC. Also, over the

course of the RFC discussion, you will probably want to update the RFC document

itself to reflect the course of the discussion (e.g. new alternatives or prior

work may be added or you may decide to change parts of the proposal itself).

In the end, when the discussion seems to reach a consensus and die down a bit, a rust team member may propose to move to "final comment period" (FCP) with one of three possible dispositions. This means that they want the other members of the appropriate teams to review and comment on the RFC. More discussion may ensue, which may result in more changes or unresolved questions being added. At some point, when everyone is satisfied, the RFC enters the FCP, which is the last chance for people to bring up objections. When the FCP is over, the disposition is adopted. Here are the three possible dispositions:

- Merge: accept the feature. Here is the proposal to merge for our ? macro feature.

- Close: this feature in its current form is not a good fit for rust. Don't be discouraged if this happens to your RFC, and don't take it personally. This is not a reflection on you, but rather a community decision that rust will go a different direction.

- Postpone: there is interest in going this direction but not at the moment. This happens most often because the appropriate rust team doesn't have the bandwidth to shepherd the feature through the process to stabilization. Often this is the case when the feature doesn't fit into the team's roadmap. Postponed ideas may be revisited later.

When an RFC is merged, the PR is merged into the RFCs repo. A new tracking

issue is created in the rust-lang/rust repo to track progress on the feature

and discuss unresolved questions, implementation progress and blockers, etc.

Here is the tracking issue for our ? macro feature.

Implementation

To make a change to the compiler, open a PR against the rust-lang/rust repo.

Depending on the feature/change/bug fix/improvement, implementation may be relatively-straightforward or it may be a major undertaking. You can always ask for help or mentorship from more experienced compiler devs. Also, you don't have to be the one to implement your feature; but keep in mind that if you don't it might be a while before someone else does.

For the ? macro feature, I needed to go understand the relevant parts of

macro expansion in the compiler. Personally, I find that improving the

comments in the code is a helpful way of making sure I understand

it, but you don't have to do that if you don't want to.

I then implemented the original feature, as described in the RFC. When

a new feature is implemented, it goes behind a feature gate, which means that

you have to use #![feature(my_feature_name)] to use the feature. The feature

gate is removed when the feature is stabilized.

Most bug fixes and improvements don't require a feature gate. You can just make your changes/improvements.

When you open a PR on the rust-lang/rust repo, a bot will assign your PR to a

reviewer. If there is a particular rust team member you are working with, you can

request that reviewer by leaving a comment on the thread with r? @reviewer-github-id (e.g. r? @eddyb). If you don't know who to request,

don't request anyone; the bot will assign someone automatically.

The reviewer may request changes before they approve your PR. Feel free to ask questions or discuss things you don't understand or disagree with. However, recognize that the PR won't be merged unless someone on the rust team approves it.

When your reviewer approves the PR, it will go into a queue for yet another bot

called @bors. @bors manages the CI build/merge queue. When your PR reaches

the head of the @bors queue, @bors will test out the merge by running all

tests against your PR on Travis CI. This takes a lot of time to

finish. If all tests pass, the PR is merged and becomes part of the next

nightly compiler!

There are a few things that may happen for some PRs during the review process:

- If the change is substantial enough, the reviewer may request an FCP on the PR. This gives all members of the appropriate team a chance to review the changes.

- If the change may cause breakage, the reviewer may request a crater run. This compiles the compiler with your changes and then attempts to compile all crates on crates.io with your modified compiler. This is a great smoke test to check if you introduced a change to compiler behavior that affects a large portion of the ecosystem.

- If the diff of your PR is large or the reviewer is busy, your PR may have some merge conflicts with other PRs that happen to get merged first. You should fix these merge conflicts using the normal git procedures.

If you are not doing a new feature or something like that (e.g. if you are fixing a bug), then that's it! Thanks for your contribution :)

Refining your implementation

As people get experience with your new feature on nightly, slight changes may be proposed and unresolved questions may become resolved. Updates/changes go through the same process for implementing any other changes, as described above (i.e. submit a PR, go through review, wait for @bors, etc).

Some changes may be major enough to require an FCP and some review by rust team members.

For the ? macro feature, we went through a few different iterations after the original implementation: 1, 2, 3.

Along the way, we decided that ? should not take a separator, which was previously an unresolved question listed in the RFC. We also changed the disambiguation strategy: we decided to remove the ability to use ? as a separator token for other repetition operators (e.g. + or *). However, since this was a breaking change, we decided to do it over an edition boundary. Thus, the new feature can be enabled only in edition 2018. These deviations from the original RFC required another FCP.
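As a rough illustration of the feature as it ended up, here is a minimal sketch of the ? repetition operator in a macro_rules! definition. The macro name sum_of is hypothetical and exists only for this example; it assumes a compiler where the feature is already stable (edition 2018 or later), so no feature gate is shown.

```rust
// `sum_of!` is a hypothetical macro used only to illustrate `?`.
macro_rules! sum_of {
    // `$(,)?` matches zero or one trailing comma.
    // Unlike `*` and `+`, the `?` operator takes no separator token
    // between the closing `)` and the operator itself.
    ($($x:expr),+ $(,)?) => {
        0 $(+ $x)+
    };
}

fn main() {
    // Both forms are accepted because the trailing comma is optional.
    println!("{}", sum_of!(1, 2, 3)); // prints 6
    println!("{}", sum_of!(1, 2, 3,)); // prints 6
}
```

The first repetition uses `,` as a separator for `+`, while the second repetition uses `?` with no separator, which mirrors the decision described above.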

Stabilization

Finally, after the feature had baked for a while on nightly, a language team member moved to stabilize it.

A stabilization report needs to be written that includes:

- brief description of the behavior and any deviations from the RFC

- which edition(s) are affected and how

- links to a few tests to show the interesting aspects

The stabilization report for our feature is here.

After this, a PR is made to remove the feature gate, enabling the feature by default (on the 2018 edition). A note is added to the Release notes about the feature.

Steps to stabilize the feature can be found at Stabilizing Features.

Rustc Bug Fix Procedure

This page defines the best practices procedure for making bug fixes or soundness corrections in the compiler that can cause existing code to stop compiling. This text is based on RFC 1589.

Motivation

From time to time, we encounter the need to make a bug fix, soundness correction, or other change in the compiler which will cause existing code to stop compiling. When this happens, it is important that we handle the change in a way that gives users of Rust a smooth transition. What we want to avoid is that existing programs suddenly stop compiling with opaque error messages: we would prefer to have a gradual period of warnings, with clear guidance as to what the problem is, how to fix it, and why the change was made. This page describes the procedure that we have been developing for handling breaking changes that aims to achieve that kind of smooth transition.

One of the key points of this policy is that (a) warnings should be issued initially rather than hard errors if at all possible and (b) every change that causes existing code to stop compiling will have an associated tracking issue. This issue provides a point to collect feedback on the results of that change. Sometimes changes have unexpectedly large consequences or there may be a way to avoid the change that was not considered. In those cases, we may decide to change course and roll back the change, or find another solution (if warnings are being used, this is particularly easy to do).

What qualifies as a bug fix?

Note that this page does not try to define when a breaking change is permitted. That is already covered under RFC 1122. This document assumes that the change being made is in accordance with those policies. Here is a summary of the conditions from RFC 1122:

- Soundness changes: Fixes to holes uncovered in the type system.

- Compiler bugs: Places where the compiler is not implementing the specified semantics found in an RFC or lang-team decision.

- Underspecified language semantics: Clarifications to grey areas where the compiler behaves inconsistently and no formal behavior had been previously decided.

Please see the RFC for full details!

Detailed design

The procedure for making a breaking change is as follows (each of these steps is described in more detail below):

- Do a crater run to assess the impact of the change.

- Make a special tracking issue dedicated to the change.

- Do not report an error right away. Instead, issue forwards-compatibility lint warnings.

  - Sometimes this is not straightforward. See the text below for suggestions on different techniques we have employed in the past.

  - For cases where warnings are infeasible:

    - Report errors, but make every effort to give a targeted error message that directs users to the tracking issue

    - Submit PRs to all known affected crates that fix the issue

      - or, at minimum, alert the owners of those crates to the problem and direct them to the tracking issue

- Once the change has been in the wild for at least one cycle, we can stabilize the change, converting those warnings into errors.

Finally, for changes to librustc_ast that will affect plugins, the general policy is to batch these changes. That is discussed below in more detail.

Tracking issue

Every breaking change should be accompanied by a dedicated tracking issue for that change. The main text of this issue should describe the change being made, with a focus on what users must do to fix their code. The issue should be approachable and practical; it may make sense to direct users to an RFC or some other issue for the full details. The issue also serves as a place where users can comment with questions or other concerns.

A template for these breaking-change tracking issues can be found below. An example of how such an issue should look can be found here.

The issue should be tagged with (at least) B-unstable and T-compiler.

Tracking issue template

This is a template to use for tracking issues:

This is the **summary issue** for the `YOUR_LINT_NAME_HERE` future-compatibility warning and other related errors. The goal of this page is to describe why this change was made and how you can fix code that is affected by it. It also provides a place to ask questions or register a complaint if you feel the change should not be made. For more information on the policy around future-compatibility warnings, see our [breaking change policy guidelines][guidelines].

[guidelines]: LINK_TO_THIS_RFC

#### What is the warning for?

*Describe the conditions that trigger the warning and how they can be fixed. Also explain why the change was made.*

#### When will this warning become a hard error?

At the beginning of each 6-week release cycle, the Rust compiler team will review the set of outstanding future compatibility warnings and nominate some of them for **Final Comment Period**. Toward the end of the cycle, we will review any comments and make a final determination whether to convert the warning into a hard error or remove it entirely.

Issuing future compatibility warnings

The best way to handle a breaking change is to begin by issuing future-compatibility warnings. These are a special category of lint warning. Adding a new future-compatibility warning can be done as follows.

    // 1. Define the lint in `src/librustc/lint/builtin.rs`:
    declare_lint! {
        pub YOUR_ERROR_HERE,
        Warn,
        "illegal use of foo bar baz"
    }

    // 2. Add to the list of HardwiredLints in the same file:
    impl LintPass for HardwiredLints {
        fn get_lints(&self) -> LintArray {
            lint_array!(
                ..,
                YOUR_ERROR_HERE
            )
        }
    }

    // 3. Register the lint in `src/librustc_lint/lib.rs`:
    store.register_future_incompatible(sess, vec![
        ...,
        FutureIncompatibleInfo {
            id: LintId::of(YOUR_ERROR_HERE),
            reference: "issue #1234", // your tracking issue here!
        },
    ]);

    // 4. Report the lint:
    tcx.lint_node(
        lint::builtin::YOUR_ERROR_HERE,
        path_id,
        binding.span,
        format!("some helper message here"));

Helpful techniques

It can often be challenging to filter out new warnings from older, pre-existing errors. One technique that has been used in the past is to run the older code unchanged and collect the errors it would have reported. You can then issue warnings for any errors you would give which do not appear in that original set. Another option is to abort compilation after the original code completes if errors are reported: then you know that your new code will only execute when there were no errors before.
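The first technique amounts to a set difference between the diagnostics of the old and new code paths. Here is a minimal, standalone sketch of that idea; the Diagnostic struct and the new_only function are hypothetical stand-ins for illustration and are not rustc APIs.

```rust
use std::collections::HashSet;

/// Hypothetical stand-in for a compiler diagnostic (not a rustc type).
#[derive(Debug, Clone, PartialEq, Eq, Hash)]
struct Diagnostic {
    span: (usize, usize),
    message: String,
}

/// Returns the diagnostics reported by the new check that the old,
/// unchanged check did not report. Only these would be issued as
/// future-compatibility warnings.
fn new_only(old: &[Diagnostic], new: &[Diagnostic]) -> Vec<Diagnostic> {
    let seen: HashSet<&Diagnostic> = old.iter().collect();
    new.iter().filter(|d| !seen.contains(d)).cloned().collect()
}

fn main() {
    // Errors the older code would have reported anyway.
    let old = vec![Diagnostic {
        span: (3, 9),
        message: "mismatched types".to_string(),
    }];
    // Errors from the new code: one pre-existing, one genuinely new.
    let new = vec![
        Diagnostic {
            span: (3, 9),
            message: "mismatched types".to_string(),
        },
        Diagnostic {
            span: (12, 20),
            message: "illegal use of foo bar baz".to_string(),
        },
    ];
    for d in new_only(&old, &new) {
        println!("future-compat warning candidate: {:?}", d);
    }
}
```

In a real implementation the comparison key would need to be chosen carefully, since two code paths may describe the same problem with different spans or wording.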

Crater and crates.io

We should always do a crater run to assess impact. It is polite and considerate to at least notify the authors of affected crates of the breaking change. If we can submit PRs to fix the problem, so much the better.

Is it ever acceptable to go directly to issuing errors?

Changes that are believed to have negligible impact can go directly to issuing an error. One rule of thumb would be to check against crates.io: if fewer than 10 total affected projects are found (not root errors), we can move straight to an error. In such cases, we should still make the "breaking change" page as before, and we should ensure that the error directs users to this page. In other words, everything should be the same except that users are getting an error, and not a warning. Moreover, we should submit PRs to the affected projects (ideally before the PR implementing the change lands in rustc).
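The rule of thumb can be written down as a small decision function. This is only a sketch: the Rollout enum, the constant, and choose_rollout are hypothetical names, the source does not say how exactly 10 affected projects should be treated (the sketch assumes that is not negligible), and it ignores any special exemption the compiler team may grant.

```rust
/// How a breaking change is first surfaced to users (hypothetical names).
#[derive(Debug, PartialEq, Eq)]
enum Rollout {
    /// Go straight to a hard error that points at the tracking issue.
    HardError,
    /// Start with a future-compatibility lint warning.
    FutureCompatWarning,
}

/// Rule-of-thumb threshold from the text: fewer than 10 affected projects.
const NEGLIGIBLE_IMPACT_LIMIT: usize = 10;

/// Picks a rollout based on the number of affected projects found by a
/// crater run. Assumption: exactly 10 counts as non-negligible.
fn choose_rollout(affected_projects: usize) -> Rollout {
    if affected_projects < NEGLIGIBLE_IMPACT_LIMIT {
        Rollout::HardError
    } else {
        Rollout::FutureCompatWarning
    }
}

fn main() {
    println!("{:?}", choose_rollout(3)); // prints HardError
    println!("{:?}", choose_rollout(42)); // prints FutureCompatWarning
}
```

Either way, the tracking issue and the PRs to affected projects are still expected; only the severity of the initial diagnostic differs.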

If the impact is not believed to be negligible (e.g., more than 10 crates are affected), then warnings are required (unless the compiler team agrees to grant a special exemption in some particular case). If implementing warnings is not feasible, then we should adopt an aggressive strategy of migrating crates before we land the change so as to lower the number of affected crates. Here are some techniques for approaching this scenario: